By David Rawlinson and Gideon Kowadlo

Jeff's new Temporal Pooler

This is article 2 in a 3 part series about Temporal Pooling (TP) in MPF/CLA-like algorithms. You can read part 1 here. For the rest of this article we will assume you've read part 1.

This article is about the new TP proposed by Jeff Hawkins. The original TP was described in the CLA white paper. We will also assume you've at least had a quick read of the linked articles. Despite our best efforts this article is only an interpretation of those methods, and it may not be entirely correct or as Numenta intended.

Separation of internal and external causes

The first topic in Hawkins' proposal covers the possible roles of specific cortical layers and the separation of internal and external causes.

Hawkins suggests that cortical layers 3, 4, 5 and 6 all implement variants of the same algorithm, with minor differences. He also suggests that each layer performs all three functions: Spatial Pooling, Sequence Memory and Temporal Pooling (although Spatial Pooling may be absent in higher hierarchy levels). In CLA, these 3 components are implemented as a matrix of sequence-memory cells. Rules concerning how and when the cells activate each other in different input contexts implement the pooling and prediction features.

Hawkins also states that one distinction between layers 3 and 4 may be that cells in layer 4 are using copies of motor actions (internal causes), to predict better. In consequence, cells in layer 3 are left trying to predict the actions that layer 4 could not predict, i.e. relying more on historically-observed sequential patterns of activation. Although both layers will learn sequential patterns of activation, layer 3 will rely more heavily on history. External causes will more often generate input that can’t be explained by motor actions, so we might expect layer 3 to more often respond to external events.

This article will not discuss these ideas any further. We note them for clarity and to distinguish our focus. Instead, we will talk about how the new CLA TP could be used to construct a hierarchical representation of changes in input patterns over time.

Temporal Slowness

Both old and new temporal poolers exploit a principle known as "Temporal Slowness", which means that output activity varies more slowly than input activity. You can read more about this general principle here.

Another, related feature of the HTM and CLA temporal poolers is that they emit a constant pattern of cell activity to generate stability. This is achieved by marking cells as "active" regardless of whether they are active via prediction or via feedforward input. Although active-by-prediction and active-by-FF-input are distinguished within the region for learning purposes, this distinction is not visible to the next higher region in the hierarchy.

Old Temporal Pooler Cell Activity

For reference, this is an outline of the TP functionality in the "old" TP from the Numenta CLA white paper.

The diagrams in this article each have 3 parts. At the top is a graph showing a fragment (in graph terminology, a component) of the Sequence Memory encoded by the cells in the region. Arrows show the learnt transitions between cells. Below the graph is a series of observations (marked "FF input") and the corresponding pattern of cell activity when each Feed-Forward (FF) input is observed. Each column represents a single cell from the sequence memory and its activity over time. Each row in the lower part of the diagrams shows all cells' activity at one moment. Cells are filled white when they are active and black when not active:

Figure 1: Spatial pooler and temporal pooler cell activity in the original CLA white paper. This image compares two patterns of cell activity over time, shown left and right. The left subfigure shows cell activity in spatial pooler cells, where the active cells are the ones whose input bits most closely match current FF input. The right subfigure shows the original CLA temporal pooling method, where cells become active when predicted far in advance of their FF input being observed, and remain active until after the associated FF input is observed. In this example, ⅔ of the active cells are identical after each FF input change. A ‘P’ denotes cells activated due to prediction. Each subfigure has 3 parts. The main part is a matrix showing sequence memory cell activity over time. Each row is one time-step, numbered 1 to 5. The FF input observed at each time step is shown in a column to the left. The top row shows a fragment of Sequence Memory formed by the cells, and the colours each cell responds to. In this simple example, the Sequence Memory graph is simply a sequence of states that are always observed in the same order.

Spatial Pooler cell activity is easiest to explain (figure 1, left). In figure 1, we see that the Sequence Memory has learnt that the colours Red, Yellow, Green, Blue and Black occur in order. One cell responds uniquely to each of these colours, creating the diagonal line of active cells over time. Each cell is only active for the duration of its FF input being observed.

The original temporal pooler premise was to drive cells to an active state via prediction (cells active via prediction are marked with a 'P') as early as possible. The cells would then remain active until either the prediction was no longer made (e.g. due to observation of an unexpected FF input pattern) or the cell becomes active via its FF input.

Time and Stability

You can see in the figure above that in a sequence of predictable inputs each cell is active over a period of 3 input changes (the only meaningful way to measure time in these examples). So let's assume each input change is one time step.

The cells are shown to be active for an arbitrary period of time - to keep the diagrams simple the minimum period is shown. In reality a fixed activation period is unlikely; it will depend on the activation of prior cells in the sparse distributed representation. However, it is still possible to make the point that at any time during a predictable sequence, a set of cells is active. Most of those cells are not changing between time steps and will be active after the next FF input change. This is the temporal pooler in action.

In the example above, each cell is predicted up to 2 steps before the corresponding input is observed. Therefore, in a predictable sequence 3 cells are simultaneously active, 2 of them due to prediction and one due to FF input.

Although the output of the temporal pooler is continuously changing, most of the active cells are not changed between inputs. In this case, 2/3 of the output is stable. With longer activation of TP cells, a larger fraction of the output becomes stable.
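The stability figure above can be checked with a toy simulation. This is only a minimal sketch under our own simplifying assumptions: one cell per distinct input, a fully predictable sequence, and a fixed prediction horizon. The names `old_tp_activity`, `stable_fraction` and `lookahead` are ours for illustration and are not taken from the CLA white paper.

```python
def old_tp_activity(sequence, lookahead=2):
    """Active cells at each time step under the old TP scheme.

    Assumes one cell per distinct input and a fully predictable sequence.
    At step t the cell for sequence[t] is active via FF input, and the
    cells for the next `lookahead` inputs are active via prediction.
    """
    return [set(sequence[t:t + lookahead + 1]) for t in range(len(sequence))]


def stable_fraction(before, after):
    """Fraction of the cells active `before` that remain active `after`."""
    return len(before & after) / len(before)


colours = ["Red", "Yellow", "Green", "Blue", "Black"]
steps = old_tp_activity(colours)
print(sorted(steps[0]))                      # ['Green', 'Red', 'Yellow']
print(stable_fraction(steps[0], steps[1]))   # 2 of the 3 active cells are unchanged

# A longer prediction horizon leaves a larger fraction unchanged.
longer = old_tp_activity(list(range(20)), lookahead=4)
print(stable_fraction(longer[0], longer[1]))  # 0.8
```

Near the end of a finite sequence fewer cells are predicted, so the fractions only hold in the interior of the sequence.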

Noisy recognition and resource constraint assumptions

To simplify the problem observed by the next level in the hierarchy it is necessary to have cell activity changes in the lower level without corresponding cell activity changes in the higher level. Given that the TP output is continuously changing, how do we sometimes avoid cell activity changes in the higher level?

There are two parts to the answer. First, all cells' FF inputs are Sparse Distributed Representations (SDRs). These are large sets of input bits, of which only a few are active at any given time. The Spatial Pooler in CLA recognises FF inputs when only a fraction of the expected (synapsed) input bits are active. For example, a cell may become active from FF input when 80% of its FF input bits are active - any 80%. The set of active input bits can change while the cell is active. This means that cells' recognition is tolerant to noisy FF input.
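As a rough illustration of this thresholded recognition, the sketch below represents an SDR as a set of active bit indices. The function name `cell_is_active`, the 10-bit cell and the example inputs are hypothetical; the 80% threshold is the example figure used above, not a documented CLA parameter.

```python
def cell_is_active(synapsed_bits, active_input_bits, threshold=0.8):
    """True if enough of the cell's synapsed input bits are currently active."""
    matched = len(synapsed_bits & active_input_bits)
    return matched >= threshold * len(synapsed_bits)


# A hypothetical cell with synapses on input bits 0..9.
synapsed = set(range(10))

input_a = {0, 1, 2, 3, 4, 5, 6, 7, 50}   # 8 of 10 synapsed bits, plus one stray bit
input_b = {2, 3, 4, 5, 6, 7, 8, 9, 61}   # a different 8 of 10, plus one stray bit
input_c = {0, 1, 2, 3, 4, 5, 6}          # only 7 of 10

print(cell_is_active(synapsed, input_a))  # True
print(cell_is_active(synapsed, input_b))  # True
print(cell_is_active(synapsed, input_c))  # False
```

Inputs `input_a` and `input_b` differ in several bits, yet the cell stays active for both. This is the sense in which the set of active input bits can change while the cell remains active.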

Noisy recognition of TP output from lower hierarchy levels is one assumption necessary for increasing stability in higher levels. But this assumption is actually a useful feature, allowing classification to be tolerant to noise.

The other necessary assumption is a resource-constraint. If an infinite supply of cells were available, then after much slow learning every FF input pattern would have a dedicated cell (due to inhibition between cells). Cell activity changes would occur throughout the hierarchy after every input change, no matter how tiny. Obviously, resource constraints are physically necessary.

The finite size of a CLA region ensures that there aren't enough cells to represent each FF input pattern perfectly. Instead, some similar (probably successive) FF inputs will be represented by the same set of active cells (quantization error).

These are both very reasonable assumptions, but worth stating and understanding.

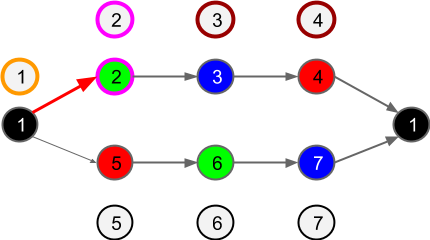

New Temporal Pooler Cell Activity

The new TP proposes that cells are only counted as active when confirmed by observation of the corresponding FF input, and that they stay active for a period of time after this:

Figure 2: Spatial pooler and temporal pooler cell activity as described by the new TP method. Each TP cell is active for a period of time after its corresponding FF input is observed. There may be no distinct SP or TP cells, but we show them separately to illustrate differences in activation behaviour.

This in itself doesn't change much, but because we are now building patterns forwards we can represent unpredicted events more accurately. Cells are no longer active until the corresponding FF input is observed or prediction is cancelled; instead they are never fully activated prior to the corresponding FF input. When a prediction error occurs, the results are immediate and lasting. Given noisy recognition of FF input, the old method would be more likely to have hidden prediction failures.
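To make the forward-building behaviour concrete, here is a minimal sketch of our reading of it for a fully predictable sequence. It assumes one cell per distinct input and a fixed activation period; `new_tp_activity` and `hold` are illustrative names of our own, not part of Hawkins' proposal.

```python
def new_tp_activity(sequence, hold=3):
    """Active cells at each time step under our reading of the new TP.

    Assumes one cell per distinct input. A cell becomes active only when its
    FF input is observed, and then stays active for `hold` steps in total.
    """
    return [set(sequence[max(0, t - hold + 1):t + 1]) for t in range(len(sequence))]


colours = ["Red", "Yellow", "Green", "Blue", "Black"]
steps = new_tp_activity(colours)
for t, active in enumerate(steps, start=1):
    print(t, sorted(active))

# No cell is ever active before its own FF input has been observed.
never_early = all(
    colour not in earlier
    for t, colour in enumerate(colours)
    for earlier in steps[:t]
)
print(never_early)  # True
```

Once the sequence is under way, 3 cells are active at each step and 2 of them carry over to the next step, as with the old method. The difference is that the active cells now describe confirmed past inputs rather than predicted future ones.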

Temporal pooling “replaces” graph components (specifically sequences of vertices) with a single vertex that represents the component by being constantly active for the duration of those inputs. It is also worth noting that to simplify any graph, the minimum number of vertices in each replaced sequence is 3. In a temporal pooler, this means that the minimum number of FF input changes for which a predicted cell must remain active is 3. If temporal pooler output is constant for sequences of length 2, the next hierarchy level will encode transitions between cells instead of sequences of cells (i.e. no simplification or effective pooling has occurred).

Activity after a Successful Prediction

The new TP proposes that there are two cortical layers of cells. One layer of cells embodies the Spatial Pooler. The other layer forms the TP. In the TP layer, cells remain active for a long time after being successfully predicted in the SP layer, but for only a short time when not predicted in the SP layer.

Prediction failures will occur regularly, whenever there are multiple future states and the available data does not allow the correct future to be determined. This looks like a fork in the Sequence Memory graph:

Figure 3: In this non-deterministic sequence, Red is followed by Yellow or Green. When the prior Red is observed, Yellow is predicted. Since it was predicted, the Yellow cell stays active for 3 steps in total. Since Blue always follows Yellow and Green, and Black follows Blue, the other cells are all active for the full 3 steps.

In the example above, the Yellow cell is successfully predicted leading to a long activation of the sequence memory cell that responds to Yellow after Red.

Activity after a Failed Prediction

The new TP proposes that in the event of a failed prediction, cells only remain active briefly. This is shown in the example below, where Yellow was predicted but Green was observed:

Figure 4: New TP cell activity after a failed prediction. Yellow was predicted but Green was observed.

The pattern of activity after a failed prediction is initially different to the pattern after a correct prediction, with only a short activation of the Green cell and no full activation of the predicted cell at all. This means that now, cells are only active when their corresponding FF input is actually observed.
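The two cases can be compared in a small simulation. This is a sketch of our interpretation only, with several assumptions of our own: one cell per colour, a single predicted successor per state (the `predicted_next` table), a long activation of 3 steps and a short activation of 1 step, and the first input treated as if it had been predicted. None of these names or values come from Hawkins' proposal.

```python
def tp_activity_with_prediction(observed, predicted_next, long_hold=3, short_hold=1):
    """Active TP cells per step: long activation if predicted, short if not."""
    remaining = {}   # cell -> number of steps of activity left, including this one
    steps = []
    previous = None
    for symbol in observed:
        # Age the cells that are already active and drop the expired ones.
        remaining = {cell: n - 1 for cell, n in remaining.items() if n > 1}
        # Assumption: the very first input counts as predicted.
        was_predicted = previous is None or predicted_next.get(previous) == symbol
        remaining[symbol] = long_hold if was_predicted else short_hold
        steps.append(set(remaining))
        previous = symbol
    return steps


# After Red the region predicts Yellow, although Green is also possible.
predicted_next = {"Red": "Yellow", "Yellow": "Blue", "Green": "Blue", "Blue": "Black"}

success = tp_activity_with_prediction(["Red", "Yellow", "Blue", "Black"], predicted_next)
failure = tp_activity_with_prediction(["Red", "Green", "Blue", "Black"], predicted_next)

for t, (ok, bad) in enumerate(zip(success, failure), start=1):
    print(t, sorted(ok), sorted(bad))
```

In this toy run the Green cell is active for a single step only, and the Yellow cell is never active in the failure case, so the two outputs differ for as long as the Yellow cell would otherwise have been active.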

The FF output of the TP after a prediction failure is quite different to the FF output during predictable sequences before and after. This helps to ensure that the unpredictable transition is modelled in higher hierarchy levels, passing the problem up the hierarchy rather than obscuring it. We anticipate that higher levels of the hierarchy will have the ability to understand and hence predict the problematic transition.

Analysis

Prediction with/without motor output distinguishes Cortex layers 3,4

Copying motor actions back to the cortex to help with prediction makes sense, especially in lower hierarchy levels. However, recent motor signals become increasingly irrelevant when trying to predict more abstract, longer term events. For example, getting sacked from your job is less likely to be due to the way you just now sipped your coffee, and more likely to be due to events that happened days or weeks ago. These older events will be hierarchically represented as more abstract causes.

At higher hierarchy levels, with greater abstraction, the "motor actions" that are necessary to explain & predict events are not simple muscle contractions, but complex sequences of decisions and behaviour with specific intents and expectations. The predictive data encoded in the Feed-Forward Indirect and Feed-Back (FB) pathways contains this data in a form that is appropriate and meaningful at each level of the hierarchy. If predictions and decisions are synonymous, then we can treat selected predictions as if they were actions.

For these reasons we are skeptical about the idea that the use of motor actions to aid prediction is enough to distinguish the functionality of different cortical layers. However, in support of the idea, layer 4 does disappear in higher hierarchy levels where motor actions would be less relevant.

Propagation of uncertainty to higher regions

The way that uncertainty (as prediction failure) is propagated up the hierarchy is vital to being able to reliably assemble a useful hierarchical representation of FF input. In fact, we believe that unpredictable events should be propagated until they reach a level of abstraction and invariance where they become predictable (see the Newtonian world assumption in the previous post). Therefore, we believe TP output should be highly orthogonal to prior and subsequent output in the event of a prediction failure. In the case of an SDR, highly orthogonal means that many bits should have dissimilar activity (a small intersection between the sets of active bits before and after).

Only a fraction of synapsed input bits are needed to activate a cell, and therefore CLA features “noise-tolerant” recognition of FF input. Only a few output bits would be dissimilar between the outcomes of prediction success and failure. This seems to raise the risk that unpredictable events could be “hidden” by noise-tolerance, and not passed up the hierarchy for higher levels to solve. From the perspective of a higher level, the set of active cells has not been significantly affected by their failure to predict.
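The concern can be illustrated numerically. In the sketch below, SDRs are sets of active bit indices, similarity is measured with the Jaccard index (our choice of measure), and a higher-level cell uses the 80% recognition threshold from the earlier example. All bit patterns are made up for illustration.

```python
def jaccard(a, b):
    """Similarity of two SDRs: size of intersection over size of union."""
    return len(a & b) / len(a | b)


def cell_is_active(synapsed_bits, active_input_bits, threshold=0.8):
    """True if enough of the cell's synapsed input bits are currently active."""
    return len(synapsed_bits & active_input_bits) >= threshold * len(synapsed_bits)


# Hypothetical TP outputs from a lower region (20 active bits each).
after_success = set(range(20))
after_failure_similar = set(range(17)) | {100, 101, 102}   # only 3 bits differ
after_failure_orthogonal = set(range(100, 120))            # no bits in common

# A hypothetical higher-level cell that has learnt the "success" pattern.
higher_cell = set(range(20))

print(jaccard(after_success, after_failure_similar))          # about 0.74
print(cell_is_active(higher_cell, after_failure_similar))     # True: failure is hidden
print(jaccard(after_success, after_failure_orthogonal))       # 0.0
print(cell_is_active(higher_cell, after_failure_orthogonal))  # False: failure is visible
```

When the failure output shares 17 of its 20 bits with the success output, the higher-level cell responds exactly as it would after a correct prediction. Only the orthogonal output changes what the higher level sees.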

Some loss of uncertainty in propagation may be acceptable in a sufficiently complex system. These are toy examples with only a few cells, whereas real CLA regions have hundreds or thousands of cells. However, we are working through some simple examples to try to better understand the behaviour and limits of the CLA.

Another detail that is not fully described in Hawkins’ current TP proposal is how long cells should be active when predicted. Maximum stability is achieved when cells are active for long periods, but we are limited by the conflicting objective to not hide uncertainty. Should we truncate activity when other prediction failures occur? In the next article we will propose explicitly making TP cells active unless uncertainty is too high, thereby implementing an auto-tuning of activation period.

Representations of random sequences

There is one comment in Hawkins' proposal that we disagree with. He says: "One of the key requirements of temporal pooling is that we only want to do it when a sequence is being correctly predicted. For example, we don't want to form a stable representation of a sequence of random transitions." In fact, it may be necessary to build a framework of some random sequences, in order to build sufficiently complex representations to explain any of the simpler events. Although the random sequences may not be the right ones, we need to have a mechanism of assembling more complex hierarchical representations even when there is no incremental explanatory power in doing so (this was discussed in the previous article). This would mean looking for structure in randomness, on the assumption that it would eventually be worthwhile due to explanatory models in higher levels of the hierarchy.

Summary

To wrap up:

- Feed-Back or Feed-Forward indirect pathway data may be a more appropriate source of data than motor actions in the FF direct pathway, for predicting events based on internal causes at higher levels of abstraction

- Reliable propagation of uncertainty (prediction failure) up the hierarchy is critical to move unexplained events to a level of abstraction where they can be understood

- We would like to extend the activity period for maximum stability, balanced against the desire to avoid hiding prediction errors. Hawkins' proposal does not detail how this should be done

- It may be necessary to perform temporal pooling even when there are no predictable patterns, in order to construct higher-order representations that may be able to predict the simpler events.

The next and final article in our 3 part series will present some specific alternative temporal pooler ideas.
