|

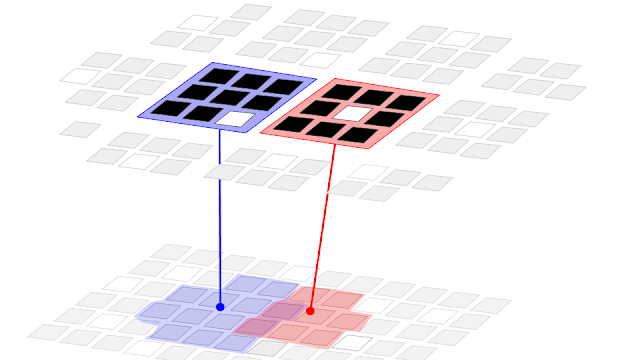

| Figure 1: Our interpretation of the Thalamocortical system as 3 interacting sub-systems (objective, subjective and executive). The structure of the diagram indicates the dominant direction of information flow in each system. The objective system is primarily concerned with feed-forward data flow, for the purpose of building a representation of the actual agent-world system. The executive system is responsible for making desired future agent-world states a reality. When predictions become observations, they are fed back into the objective system. The subjective system is circular because its behaviour depends on internal state as much as external. The subjective system builds a filtered, subjective model of observed reality that also represents objectives or instructions for the executive. This article will describe how this model fits into the structure of the Thalamocortical system. |

Authors: David Rawlinson and Gideon Kowadlo

This is part 4 of our series on how to build an artificial general intelligence (AGI).

- Part 1: An overview of hierarchical general intelligence

- Part 2: Reverse engineering (the physical perspective - cells and layers - and the logical perspective - a hierarchy).

- Part 3: Circuits and pathways; we introduced our canonical cortical micro-circuit and fitted pathways to it.

In this article, part 4, we will try to interpret all the information provided so far. We will try to fit what we know about biological general intelligence to our theoretical expectations.

Systems

We believe cortical activity can be usefully interpreted as 3 integrated systems. These are:

- Objective system

- Subjective system

- Executive system

So, what are these systems, why are they needed and how do they work?

Objective System

We theorise that the purpose of the objective system is to construct a hierarchical, generative model of both the external world and the actual state of the agent. This includes internal plans & goals already executed or in progress. From our conceptual overview of General Intelligence we think that this representation should be distributed, compositional and therefore robust and able to model novel situations immediately and meaningfully.

The objective system models varying timespans depending on the level of abstraction, but events are anchored to the current state of the world and agent. Abstract events may cover long periods of time - for example, “I made dinner” might be one conceptual event.

We propose that the objective system is implemented by pyramidal cells in layers 2/3 and by spiny excitatory cells in layer 4. Specifically, we suggest that the purpose of the spiny excitatory cells is primarily dimensionality reduction, by performing a classifier function, analogous to the ‘Spatial Pooling’ function of Hawkins’ HTM theory. This is supported by analysis of C4 spiny stellate connectivity: “... spiny stellate cells act predominantly as local signal processors within a single barrel...”.

We believe the pyramidal cells are more complex and have two functions. First, they perform dimensionality reduction by requiring a set of active inputs on specific apical (distal) dendrite branches to be simultaneously observed before the apical dendrite can output a signal (an action potential). Second, they use basal (proximal) dendrites to identify the sequential context in which the apical dendrite has become active. Via a local competitive process, pyramidal cells learn to become active only when observing a set of specific input patterns in specific historical contexts.

The output of pyramidal cells in C2/3 is routed via the Feed-Forward Direct pathway to a “higher” or more abstract cortical region, where it enters in C4 (or in some parts of the Cortex, C2/3 directly). In this “higher” region, the same classifier and context recognition process is repeated. If C4 cells are omitted, we have less dimensionality reduction and a greater emphasis on sequential or historical context.

We propose these pyramidal cells only output along their axons when they become active without entering a “predicted” state first. Alternatively, interneurons could play a role in inhibiting cells via prediction to achieve the same effect. If pyramidal cells only produce an output when they make a False-Negative prediction error (i.e. they fail to predict their active state), their output is equivalent to Predictive Coding (link, link). Predictive Coding produces an output that is more stable over time, which is a form of Temporal Pooling as proposed by Numenta.

To summarize, the computational properties of the objective system are:

- Replace simultaneously active inputs with a smaller set of active cells representing particular sub-patterns, and

- Replace predictable sequences of active cells with a false-negative error coding to transform the output into a simpler sequence of prediction errors

These functions will achieve the stated purpose of incrementally transforming input data into simpler forms with accumulating invariances, while propagating (rather than hiding) errors, for further analysis in other Columns or cortical regions. In combination with a tree-like hierarchical structure, higher Columns will process data with increasing breadth and stability over time and space.
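The two computational properties listed above can be illustrated with a minimal sketch. This is not a model of real cortical cells: the names `classify` and `ErrorCoder` are hypothetical, receptive fields are assumed to be plain sets of input indices, and prediction is assumed to be a simple first-order memory of which cells followed which.

```python
def k_winners(overlaps, k):
    """Return the indices of the k largest overlaps (ties go to the lowest index)."""
    ranked = sorted(range(len(overlaps)), key=lambda i: (-overlaps[i], i))
    return set(ranked[:k])


def classify(active_inputs, receptive_fields, k):
    """Property 1: replace a set of active inputs with a smaller set of winning cells.

    receptive_fields is a list of sets (an assumption made for illustration);
    each cell's response is the size of its overlap with the active inputs.
    """
    overlaps = [len(active_inputs & field) for field in receptive_fields]
    return k_winners(overlaps, k)


class ErrorCoder:
    """Property 2: output only the cells that became active without being predicted."""

    def __init__(self):
        self.transitions = {}  # cell -> set of cells observed to follow it
        self.previous = set()  # cells active at the previous step

    def step(self, active):
        # Predict from everything that has previously followed the last active cells.
        predicted = set()
        for cell in self.previous:
            predicted |= self.transitions.get(cell, set())
        errors = active - predicted  # false-negative prediction errors
        # Learn the transitions that were actually observed.
        for cell in self.previous:
            self.transitions.setdefault(cell, set()).update(active)
        self.previous = set(active)
        return errors
```

Feeding a repeating sequence such as A, B, C, A, B, C into `ErrorCoder.step` produces output only while the sequence is still unfamiliar; once every transition has been seen, the output falls silent until something unpredicted happens.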

The Feed-Forward direct pathway is not filtered by the Thalamus. This means that Columns always have access to the state of objective system pyramidal cells in lower columns. This could explain the phenomenon that we can process data without being aware of it (aka “Blindsight”); essentially the objective system alone does not cause conscious attention. This is a very useful quality, because it means the data required to trigger a change in attention is available throughout the cortex. The “access” phenomenon is well documented and rather mysterious; the organisation of the cortex into objective and subjective systems could explain it.

Another purpose of the objective system is to ensure internal state cannot become detached from reality. This can easily occur in graphical models, when cycles form that exclude external influence. To prevent this, we believe that the roles of feed-forward and feed-back input must be separated to break the cycles. However, C2/3 pyramidal cells’ dendrites receive both feed-forward (from C4) and feed-back input (via C1).

One way that this problem might be avoided is by different treatment of feed-forward and feed-back input, so that the latter can be discounted when it is contradicted by feed-forward information. There is evidence that feed-forward and feedback signals are differently encoded, which would make this distinction possible.

We speculate that the set of states represented by the cells in C2/3 could be defined only using feed-forward input, and that the purpose of feedback data in the objective system is restricted to improved prediction, because feedback contains state information from a larger part of the hierarchy (see figure 2).

|

| Figure 2: The benefit of feedback. This figure shows part of a hierarchy. The hierarchy structure is defined by the receptive fields of the columns (shown as lines between cylinders, left). Each Column has receptive fields of similar size. Moving up the hierarchy, Columns receive increasingly abstract input with a greater scope, being at the top of a pyramid of lower Columns whose receptive fields collectively cover a much larger area of input. Feedback has the opposite effect, summarizing a much larger set of Column states from elsewhere and higher in the hierarchy. Of course there is information loss during these transfers, but all data is fully represented somewhere in the hierarchy. |

So although the objective system makes use of feedback, the hierarchy it defines should be predominantly determined by feed-forward information. The feed-forward direct pathway (see figure 3) enables the propagation of this data and consequently the formation of the hierarchy.

|

| Figure 3: Feed-Forward Direct pathway within our canonical cortical micro-circuit. Data travels from C4 to C2/3 and then to C4 in a higher Column. This pattern is repeated up the hierarchy. This pathway is not filtered by the Thalamus or any other central structure, and note that it is largely uni-directional (except for feedback to improve prediction accuracy). We propose this pathway implements the Objective System, which aims to construct a hierarchical generative model of the world and the agent within it. |

Subjective System

We think that the subjective system is a selectively filtered model of both external and internal state including filtered predictions of future events. We propose that filtering of input constitutes selective attention, whereas filtering of predictions constitutes action selection and intent. So, the system is a subjective model of reality, rather than an objective one, and it is used for both perception and planning simultaneously.

The time span encompassed by the system includes a subset of both present and future event-concepts, but as with the objective system, this may represent a long period of real-world time, depending on the abstraction of the events (for example, “now” I am going to work, and “next” I will check my email [in 1 hour’s time]).

It makes good sense to have two parallel systems, one filtered (subjective) and one not (objective). Filtering external state reduces distraction and enhances focus and continuity. Filtering of future predictions allows selected actions to be maintained and pursued effectively, to achieve goals.

In addition to events the agent can control, it is important to be aware of negative outcomes outside the agent’s control. Therefore the state of the subjective system must include events with both positive and negative reward outcomes. There is a big difference between a subjective model and a goal-oriented planning model. The subjective system should represent all outcomes, but preferentially select positive outcomes for execution.

The subjective system represents potential future states, both internal and external. It does not necessarily represent reality; it represents a biased interpretation of intended or expected outcomes based on a biased interpretation of current reality! These biases and omissions are useful; they provide the ability to “imagine” future events by serially “predicting” a pruned tree of potential futures.

More speculatively, differences between the subjective and objective systems may be the cause of phenomena such as selective awareness and “access” consciousness.

|

| Figure 4: Feed-Forward Indirect pathway, particularly involved in the Subjective system due to its influence on C5. The Thalamus is involved in this pathway, and is believed to have a gating or filtering effect. Data flows from the Thalamus to C4, to C2/3, to C5 and then to a different Thalamic nucleus that serves as the input gateway to another cortical Column in a different region of the Cortex. We propose that the Feed-Forward Indirect pathway is a major component of the subjective system. |

|

| Figure 5: The inhibitory micro-circuit, which we suggest makes the subjective system subjective! The red highlight shows how the Thalamus controls activity in C5 by activating inhibitory cells in C4. The circuit is completed by C5 pyramidal cells driving C6 cells that modulate the activity of the same Thalamic nuclei that selectively activate C5. |

The subjective system primarily comprises C5 (where subjective states are represented) and the Thalamus (which controls subjectivity), but it draws input from the objective system via C2/3. The latter provides context and defines the role and scope (within the hierarchy) of C5 cells in a particular column. Between each cortical region (and therefore every hierarchy level), input to the subjective system is filtered by the Thalamus (figure 5). This implements the selection process. The Feed-Forward Indirect pathway includes these Thalamo-Cortical loops.

We suggest the Thalamus implements selection within C5 using special cells in C4 that are activated by axons (outputs) from the Thalamus (see figure 6). These inhibitory C4 cells target C5 pyramidal cells and inhibit them from becoming active. Therefore, thalamic axons are both informative (“this selection has been made”) and executive (the axon drives inhibition of selected C5 pyramidal cells).

|

| Figure 6: Thalamocortical axons (afferents) are shown driving inhibitory cells in C4 (leftmost green cell) that in turn inhibit pyramidal cells in C5 (red). They also provide information about these selections to other layers, including C2/3. When a selection has been made, it becomes objective rather than subjective, hence provision of a copy to C2/3. Image source. |

Note that selection may be a process of selective dis-inhibition rather than direct control: Selection alone may not be enough to activate the C5 cells. Instead, C5 pyramidal cells likely require both selection by the Thalamus, and feed-forward activation via input from C2/3. The feed-forward activation could occur anywhere within a window of time in which the C5 cell is “selected”. This would relax timing requirements on the selection task, making control easier; you only need to ensure that the desired C5 cell is disinhibited when the right contextual information arrives from other sources (such as C2/3). This also ensures C5 cell activation fits into its expected sequence of events and doesn’t occur without the right prior context.

C5 also benefits from informational feedback from higher regions and neighbouring cells that help to define unique contexts for the activation of each cell.

We suggest that C5 pyramidal cells are similar to C2/3 pyramidal cells but with some differences in the way the cells become active. Whereas C2/3 cells require both matching input via the apical dendrites and valid historical input to the basal dendrites to become active, C5 cells additionally need to be disinhibited for full activation to occur.
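The difference between the two activation rules can be written down as a minimal sketch. The class name `C5Cell`, the `window` parameter and the discrete time steps are assumptions made for illustration; the text only says that feed-forward activation may arrive anywhere within the period in which the cell is “selected”.

```python
def c23_active(apical_match, basal_context):
    """C2/3 rule: matching input and a valid historical context are both required."""
    return apical_match and basal_context


class C5Cell:
    """C5 rule: the C2/3 conditions plus disinhibition within a time window."""

    def __init__(self, window=3):
        self.window = window  # hypothetical: number of steps a selection stays valid
        self.remaining = 0    # steps of disinhibition left

    def select(self):
        """Thalamic selection: open the disinhibition window."""
        self.remaining = self.window

    def step(self, apical_match, basal_context):
        """Advance one time step and report whether the cell is fully active."""
        disinhibited = self.remaining > 0
        active = disinhibited and apical_match and basal_context
        if self.remaining > 0:
            self.remaining -= 1
        return active
```

With `window=2`, a cell that is selected and then receives matching input one step later still becomes active, whereas the same input arriving before selection, or after the window has closed, has no effect. This is the relaxed timing requirement described above.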

As mentioned in the previous article, output from C5 cells sometimes drives motors very directly, so full activation of C5 cells may immediately result in physical actions. We can consider C5 to be the “output” layer of the cortex. This makes sense if the representation within C5 includes selected future states.

Management of C5 activity will require a lot of inhibition; we would expect most of the input connections to C5 to be inhibitory because in every context, for every potential outcome, there are many alternative outcomes that must be inhibited (ignored). At any given time, only a sparse set of C5 cells would be fully active, but many more would be potentially-active (available for selection).

Given predictive encoding and filtering inhibition, it would be common for few pyramidal cells to be active in a Column at any time. Separately, we would expect objective C2/3 pyramidal activity to be more consistent and repeatable than subjective C5 pyramidal activity, given a constant external stimulus.

Executive System

So far we have defined a mechanism for generating a hierarchical representation and a mechanism for selectively filtering activity within that representation. In our original conceptual look at general intelligence, we also desired that filtering predictions would be equivalent to action selection. But if we have selected predictions of future actions at various levels of abstraction within the hierarchy, how can we make these abstract prediction-actions actually happen?

The purpose of the executive system is to execute hierarchical plans reliably. As previously discussed, this is no trivial matter due to problems such as vanishing agency at higher hierarchy levels. If a potential future outcome represented within the subjective system is selected for action, the job of the executive system is to make it occur.

We know that we want abstract concepts at high levels within the hierarchy to be faithfully translated into their equivalent patterns of activity at lower levels. Moving towards more concrete forms would result in increasing activity as the incremental dimensionality reduction of the feed-forward hierarchy is reversed.
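As a rough illustration of this reversal, the sketch below expands one abstract step into its concrete parts. The function `expand` and the example procedure names are hypothetical, and the decomposition table is assumed to be acyclic; nothing here is meant as a claim about how the Cortex stores plans.

```python
def expand(plan, procedures):
    """Recursively replace an abstract step with its constituent steps.

    procedures maps an abstract step to an ordered list of more concrete steps.
    Any step without an entry is treated as a primitive (an assumption).
    """
    steps = procedures.get(plan)
    if steps is None:
        return [plan]
    primitives = []
    for step in steps:
        primitives.extend(expand(step, procedures))
    return primitives


# Hypothetical example: one abstract step becomes three concrete ones.
procedures = {
    "drink": ["reach", "sip"],
    "reach": ["extend_arm", "grasp_cup"],
}
concrete_steps = expand("drink", procedures)
```

Here a single abstract item unfolds into a longer list of concrete items, which is the sense in which activity increases when moving down the hierarchy.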

|

| Figure 7: Differences in dominant direction of data flow between objective and executive systems. Whereas the Objective system builds increasingly abstract concepts of greater breadth, the Executive system is concerned with decomposing these concepts into their many constituent parts, so that hierarchically-represented plans can be executed. |

We also know that we need to actively prioritize execution of a high level plan over local prediction / action candidates in lower levels. So, we are looking for a cascade of activity from higher hierarchy levels to lower ones.

|

| Figure 8: One of two Feed-Back direct pathways. This pathway may well be involved in cascading control activity down the hierarchy towards sensors and motors. Activity propagates from C6 to C6 directly; C6 modulates the activity of local C5 cells and relevant Thalamic nuclei that activate local C5 cells by selective disinhibition in conjunction with matching contextual information from C2/3. |

It turns out that such a system does exist: The feed-back direct pathway from C6 to C6. Cortex layer 6 is directly connected to Cortex layer 6 in the hierarchy levels immediately below. What’s more, these connections are direct, i.e. unfiltered (which is necessary to avoid the vanishing agency problem). Note that C5 (the subjective system) is still the output of the Cortex, particularly in motor areas. C6 must modulate the activity of cells in C5, biasing C5 to particular predictions (selections) and thereby implementing a cascading abstract plan. Finally, C6 also modulates the activity of Thalamic nuclei that are responsible for disinhibiting local C5 cells. This is obviously necessary to ensure that the Thalamus doesn’t override or interfere with the execution of a cascading plan already selected at a higher level of abstraction.

Our theory is that ideally, all selections originate centrally (e.g. in the Thalamus). When C5 cells are disinhibited and then become predicted, an associated set of local C6 cells is triggered to make these C5 predictions become reality.

These C6 cells have a number of modulatory outputs to achieve this goal:

Executive Training

No, this is not a personal development course for CEOs. This section checks whether C6 cells can learn to replay specific action sequences via C5 activity. This is an essential feature of our interpretation, because only C6 cells participate in a direct, modulatory feedback pathway.

We propose that C6 pyramidal neurons are taught by historical activity in the subjective system. Patterns of subjective activity become available as “stored procedures” (sequences of disinhibition and excitatory outputs) within C6.

Let’s start by assuming that C6 pyramidal cells have similar functionality to C2/3 and C5 pyramidal cells, due to their common morphology. Assume that C5 cells in motor areas are direct outputs, and when active will cause the agent to take actions without any further opportunity for suppression or inhibition (see previous article).

In other cortical areas, we assume that the role of C5 cells is to trigger more abstract “plans” that will be incrementally translated into activity in motor areas, and therefore will also become actions performed by the agent.

To hierarchically compose more abstract action sequences from simpler ones, we need activity of an abstract C5 cell to trigger a sequence of activity in more concrete C5 cells. C6 cells will be responsible for linking these C5 cells. So, activating a C6 cell should trigger a replay of a sequence of C5 cell activity in a lower Column. How can C6 cells learn which sequences to trigger, and how can these sequences be interpreted correctly by C6 cells in higher hierarchy levels?

C6 pyramidal cells are mostly oriented with their dendrites pointing towards the more superficial cortex layers C1,...,C5 and their axons emerging from the opposite end. Activity from C5 to C6 is transferred via axons from C5 synapsing with dendrites from C6. Given a particular model of pyramidal cell learning rules, C6 pyramidal cells will come to recognize patterns of simultaneous C5 activity in a specific sequential context, and C6 interneurons will ensure that unique sets of C6 pyramidal cells respond in each context.

So how will these C6 cells learn to trigger sequences of C5 cells? We know that the axons of C6 cells bend around and reach up into C5, down to the Thalamus and directly to hierarchically-lower C6 cells. At all targets they can be excitatory or inhibitory.

All we need beyond this is for C6 axons to seek out axon target cells that become active immediately after the originating C6 cell is stimulated by active C5 cells. This will cause each C6 cell to trigger C5 and C6 cells that are observed to be activated afterwards. Note that we require the C6 cells themselves be organised into sequences (technically, a graph of transitions).
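This learning rule can be sketched in a few lines, under strong simplifying assumptions: time is divided into discrete steps, cells are identified by hypothetical string names, every target is excitatory, and “immediately after” means “in the next step”. The functions `learn_targets` and `replay` are illustrative names, not part of any existing library.

```python
def learn_targets(history, c6_names):
    """Link each C6 cell to the cells seen active one step after it.

    history is a list of sets of active cell names, one set per time step.
    c6_names is the set of names that belong to C6 cells.
    """
    targets = {name: set() for name in c6_names}
    for now, after in zip(history, history[1:]):
        for name in c6_names & now:
            targets[name] |= after - {name}
    return targets


def replay(start, targets, max_steps=10):
    """Trigger one C6 cell and follow the learned graph of transitions.

    Returns the set of cells triggered at each step. max_steps guards
    against cycles in the learned graph.
    """
    sequence = []
    frontier = {start}
    for _ in range(max_steps):
        triggered = set()
        for name in frontier:
            triggered |= targets.get(name, set())
        if not triggered:
            break
        sequence.append(triggered)
        # Only C6 cells have learned targets, so only they continue the chain.
        frontier = {name for name in triggered if name in targets}
    return sequence
```

For example, after observing the history `[{"c6_a"}, {"c5_reach", "c6_b"}, {"c5_grasp"}]`, triggering `c6_a` alone replays the reach step followed by the grasp step, because `c6_a` has learned to trigger `c6_b`, which in turn has learned to trigger `c5_grasp`.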

Target seeking by axons is known as “Axon Guidance” and C6 pyramidal cells’ axons do seem to target electrically active cells by ceasing growth when activity is detected. We have not yet found biological evidence for the predicted timing.

C6 axons can also target C4 inhibitory cells (evidence) and Thalamic cells, which again is compatible with our interpretation, as long as they are cells that become active after the originating C6 cell. If we want to “replay” some activity that followed a particular C6 cell, then all the cells described above should be excited or inhibited to ensure that the same events occur again. Activating a C6 cell directly should reproduce the same outcome as incidental activation of the C6 cell via C5 - a chain of sequential inhibition and promotion will result. Note that the same learning rule could work to discover all axon targets mentioned.

Collectively, the C6 cells within a Column will become a repertoire of “stored procedures” that can be triggered and replayed by a cascade of activity from higher in the hierarchy or by direct selection via C5. C6 cells would behave the same way whether activated by local C5 cells, or by C6 cells in the hierarchy level above. This allows cascading, incremental execution of hierarchical plans.

C6 cells do not need to replace sequences of C5 cell activity with a single C6 cell (i.e. label replacement for symbolic encoding), but they do need to collectively encode transitions between chains of C5 cells, individually trigger at least 1 C5 cell and collectively allow a single C6 cell to trigger a sequence of C6 cells in both the current and lower hierarchy regions.

C6 interneurons can resolve conflicts when multiple C6 triggers coincide within a column. We can expect C6 interneurons to inhibit competing C6 pyramidal cells until the winners are found, resulting in a locally consistent plan of action.

As with layers C2/3 and C5, C6 inhibitory interneurons will also support training C6 pyramidal cells for collective coverage of the space of observed inputs, in this case from C5 and C2/3.

Bootstrapping

Now we are only left with a bootstrapping problem: How can the system develop itself? Specifically, how do the sequences of C5 activity come to be defined so that they can be learned by C6?

We suggest that conscious choice of behaviour via the Thalamus is used to build the hierarchical repertoire from simple primitive actions to increasingly sophisticated sequences of fine control. Initially, thalamic filtering of C5 state would be used to control motor outputs directly, without the involvement of C6. Deliberate practice and repetition would provide the training for C6 cells to learn to encode particular sequences of behaviour, making them part of the repertoire available to C6 cells in hierarchically “higher” Columns.

Initially, concentration is needed to perform actions via direct C5 selections; these activities need to be carefully centrally coordinated using selective attention. However, when C6 has learnt to encode these sequences, they become both more reliable and require less effort to execute, requiring only a trigger to one C6 cell.

After training, only minimal thalamic interventions are needed to execute complex sequences of behaviour learned by C6 cells. Innovation can continue by combining procedures encoded by C6 with interventions via the Thalamus, which can still excite or inhibit C5 cells. However, in most other cases C6 training is accelerated by the independence of Columns: When a C6 cell learns to control other cells within the Column, this learning remains valid no matter how many higher hierarchy levels are placed on top. By analogy, once you’ve learned to drink from a cup, you don’t need to relearn that skill to drink in restaurants, at home, at work etc.

As C6 learns and starts to play a role in the actions and internal state of the agent, it becomes important to provide the state of C6 to the objective and subjective systems as contextual input.

Axons from C6 to other, hierarchically lower Columns take two paths: To C6, and to C1. We propose that the copy provided to C1 is used as informational feedback in C2/3 and C5 pyramidal cells (these axons synapse with Pyramidal cell Apical dendrites). We suggest the copy to C6 allows C6 cells to execute plans hierarchically, by delegating execution to a number of more concrete C6 cells. Therefore, the feedback direct pathway from C6 to C6 is part of the executive system. These axons should synapse on cell bodies, or nearby, to inhibit or trigger C6 activation artificially (rather than via C5).

Interpretation of the Thalamus

Rather than as merely a relay, we propose that a better concept of the Thalamus is as a control centre. Its job is to centrally control cortical activity in C5 (the subjective system). Abstract activity in C5 is propagated down the hierarchy by C6, and translated into its concrete component states, eventually resulting in specific motor actions. Therefore, via this feedback pathway the filtering performed by the Thalamus assumes an executive role also.

We believe that filtering predictions of oneself performing an action or experiencing a reward is the mechanism by which objectives and plans are selected. We believe there is only one representation of the world in our heads. There is no separate “goal-oriented” or “action-based” representation. This means that filtering predictions is the mechanism of behaviour generation. Note that in a hierarchical system, you can simultaneously select novel combinations of predictions to achieve innovation without changing the hierarchical model.

Our interpretation of the Thalamus depends on some theoretical assumptions about how general intelligence works. Crucially, we believe there is no difference between selective awareness of externally-caused and self-generated events, except that some of the latter have agency in the real world via the agent’s actions. This means that selective attention and action selection can both be consequences of the same subjective modelling process.

But where does selection actually occur?

For a number of practical reasons, action and attentional selection should be centralized functions. For one thing, the reward criteria for selecting actions are of much smaller dimension than the cortical representations - for example, the set of possible pain sensations is far more limited than the potential external causes of pain. We essentially need to compare the reward of all potential actions against each other, rather than against an absolute scale.

It is also important that conflicts between items competing for attention or execution are resolved so that incompatible plans are replaced by a single clear choice. Conflict resolution is difficult to do in a highly parallel & distributed system; instead, it is preferable to force all alternatives to compete against each other until a few clear winners are found.

Finally, once an action or attentional target is selected, it should be maintained for a long period (if still relevant), to avoid vacillation. (See Scholarpedia for a good introduction to the difficulties of conflict resolution and the importance of sticking to a decision for long enough to evaluate it).
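The three requirements above (relative comparison of rewards, a single clear winner, and persistence of the choice) can be combined in a minimal sketch. The class name `Selector` and the `margin` parameter are assumptions made for illustration; we are not suggesting the Thalamus uses a fixed numerical margin.

```python
class Selector:
    """Centralized choice among candidates, with resistance to vacillation."""

    def __init__(self, margin=0.2):
        self.margin = margin  # hypothetical: how much better a rival must be
        self.current = None   # the choice currently being maintained

    def choose(self, rewards):
        """rewards maps each candidate to its expected reward.

        Candidates are compared against each other, not an absolute scale.
        The current choice is kept while it is still a candidate and no
        rival beats it by more than the margin.
        """
        best = max(rewards, key=rewards.get)
        if self.current in rewards:
            if rewards[best] - rewards[self.current] <= self.margin:
                return self.current
        self.current = best
        return best
```

With `margin=0.2`, a selector that has chosen “eat” keeps that choice when “walk” becomes slightly more rewarding, and only switches when the difference is large. If the current choice is no longer among the candidates (it is no longer relevant), the best remaining candidate is selected immediately.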

We believe the Thalamus plays this role via its interactions with the Cortex. It interacts with the Cortex in two ways. First, the Thalamus selectively dis-inhibits particular C5 cells, allowing them to become active when the right circumstances are later observed objectively (i.e. via C2/3, which is not subjective).

Second, the Thalamus must also co-operate with the Feed-Back cascade via C6. While the Thalamus generates new selections by controlling C5, it must also permit the execution of existing, more abstract Thalamic selections by allowing cascading feedback activity to override local selections. Together, these mechanisms ensure that execution of abstract plans is as easily accomplished as simpler, concrete actions.

Interpretation of the Basal Ganglia

The Basal Ganglia are involved in so many distinct functions that they can’t be fully described within this article. They consist of a set of discrete structures located adjacent to the Thalamus.

In our model, selection is implemented by the Thalamus manipulating the subjective system within the Cortex. We propose that the selections themselves are generated by the Basal Ganglia, which then controls the behaviour of the Thalamus.

Crucially, we believe the Striatum within the Basal Ganglia uses reward values (such as pleasure and pain) to make adaptive selections. In other words, the Basal Ganglia are responsible for picking good actions, biasing the entire Thalamo-Cortical system towards futures that are expected to be more pleasant for the agent.

However, to make adaptive choices it is necessary to have accurate context and predictions (candidate actions). The hierarchical model defined within the Cortex is an efficient and powerful source for this data, and in fact, this pathway (Cortex → Basal Ganglia → Thalamus → Cortex) does exist within the brain (see figure 9 below).

Thanks to studies of relevant disorders such as Parkinson’s and Huntington’s, it is known that this pathway is associated with behaviour initiation and selection based on adaptive criteria.
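To make the idea of reward-based adaptive selection concrete, here is a minimal sketch of one simple way it could be done. This is a generic incremental value estimate, swapped in for illustration; it is not a model of the Striatum, and the class `RewardLearner`, its `learning_rate` and the context and action names are all hypothetical.

```python
class RewardLearner:
    """Learn which candidate action has been most rewarding in each context."""

    def __init__(self, learning_rate=0.5):
        self.learning_rate = learning_rate
        self.values = {}  # (context, action) -> estimated reward

    def update(self, context, action, reward):
        """Move the estimate for this context and action towards the reward."""
        key = (context, action)
        old = self.values.get(key, 0.0)
        self.values[key] = old + self.learning_rate * (reward - old)

    def select(self, context, candidates):
        """Pick the candidate with the highest estimate (unseen ones count as 0)."""
        return max(candidates, key=lambda a: self.values.get((context, a), 0.0))


# Hypothetical usage: context and candidate actions would come from the Cortex.
learner = RewardLearner(learning_rate=0.5)
learner.update("ice_cream_shop", "buy", 1.0)
learner.update("ice_cream_shop", "leave", -1.0)
choice = learner.select("ice_cream_shop", ["leave", "buy"])
```

The point of the sketch is the division of labour: the context and the candidates are supplied from outside (in our interpretation, by the Cortex), while the learner only needs a low-dimensional reward signal to bias the choice.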

|

| Figure 9: Pathways forming a circuit from Cortex to Basal Ganglia to Thalamus and back to Cortex. Image source. |

Lifecycle of an idea

Using our interpretation of biological general intelligence, we can follow the lifecycle of an idea from conception to execution. Let’s walk through the theorized response to a stimulus, resulting in an action.

Although the brain is operating constantly and asynchronously, we can define the start of our idea as some sensory data that arrives at the visual cortex. In this example, it’s an image of an ice-cream in a shop.

Objective Modelling

Sensor data propagates unfiltered up the Feed-Forward Direct pathway, activating cells in C4 and C2/3 in numerous cortical areas as it is transformed into its hierarchical form. The visual stimuli become a rich network of associated concepts, including predictions of near-future outcomes, such as experiencing the taste of ice-cream. These concepts represent an objective external reality and are now active and available for attention.

Subjective Prediction

Activity within the Objective system triggers activity in the Subjective system. Some C5 cells become “predicted”, but are inhibited by the Thalamus. These cells represent potential future actions and outcomes: things that, from experience, we know are likely to occur after the current situation.

The Cortex projects data from C2/3 to the Striatum where it is weighted according to reward criteria. A strong response to the flavour of the frozen treat percolates through the Basal Ganglia and manipulates the activity of the Thalamus.

Between the Thalamus and the Cortex, an iterative negotiation takes place resulting in the selection (via dis-inhibition) of some C5 cells. The Basal Ganglia have learned which manipulations of the Thalamus maximize the expected Reward given the current input from Cortex.

The way that the Thalamus stimulates particular C5 cells is somewhat indirect. The path of activity to “select” C5 cells in layer n is C5[n-1] → Thalamus → C4[n] → C5[n]. The signal is re-interpreted at each stage of this pipeline - that is, connections do not carry a specific meaning from point to point. Therefore, you can’t just adjust one “wire” to trigger a particular C5 cell. Rather, you must adjust the inhibition of input to many C4 → C5 cells until you’ve achieved the conditions to “select” a target C5 cell. Many target C5 cells might be simultaneously selected.

In addition to requiring disinhibition, C5 cells also wait for specific patterns of cell activity in C2/3 prior to becoming “predicted”. This means that it’s very difficult to select a C5 cell that is not “predicted”; it simply doesn’t have the support to out-compete its neighbours in the column and become “selected”. This prevents unrealistic outcomes being “selected”, or output commencing, before the right circumstances have arrived to match the expectation.
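The two conditions described above can be sketched in a few lines of Python. This is a toy illustration, not a model of real neurons: the `C5Cell` class, the feature names and the set-based matching are all assumptions made for the example. It ignores competition between neighbouring cells in a column and the indirect route through the Thalamus and C4.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class C5Cell:
    name: str
    # Hypothetical: the C2/3 features that must be active
    # before this cell counts as "predicted".
    required_c23: frozenset


def is_predicted(cell, active_c23):
    """A cell is "predicted" when all of its required C2/3 features are active."""
    return cell.required_c23 <= active_c23


def active_cells(cells, active_c23, disinhibited):
    """Return the names of cells that are both "predicted" and "selected"."""
    return [
        cell.name
        for cell in cells
        if is_predicted(cell, active_c23) and cell.name in disinhibited
    ]


cells = [
    C5Cell("eat_ice_cream", frozenset({"ice_cream_seen", "in_shop"})),
    C5Cell("go_swimming", frozenset({"at_beach"})),
]
active_c23 = {"ice_cream_seen", "in_shop", "sunny"}

# Both cells are disinhibited, but only one has C2/3 support.
result = active_cells(
    cells, active_c23, disinhibited={"eat_ice_cream", "go_swimming"}
)
print(result)
```

Here `go_swimming` is disinhibited but never becomes active, because the C2/3 activity that would make it “predicted” is absent.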

Eventually, a subset of C5 cells become “predicted” and “selected”, representing a subjective model of potential futures for the agent in the world. In this case, the anticipated future involves eating ice-cream.

Execution

When C5 cells become active, they in turn drive C6 pyramidal cells that are responsible for causing the future represented by “contextual, selected & predicted” C5 cells. In this case, C6 cells are charged with executing the high-level plan to “buy some ice-cream and eat it”.

The plan is embodied by many C5 cells, distributed throughout the hierarchy; each represents a subset of the “qualia” relating to the eating of ice-cream. C6 cells begin to interpret these C5 cells into concrete actions, via the C6-C6 Feed-Back Direct pathway. Crucially, they no longer require the Thalamus to modulate the input that makes C5 cells “selected”. Instead, C6 cells stimulate C5 and C6 cells in hierarchically-lower Columns directly, moving them to “selected” status and allowing them to become active as soon as the corresponding Feed-Forward evidence arrives to match.

C6 cells also modulate relay cells in the Thalamus, guiding the Thalamus to disinhibit C5 cells in lower hierarchy regions. This helps to ensure the parts of the decomposed plan are executed as intended. In turn, these newly selected “lower” C5 cells drive associated C6 cells, and the plan cascades down the hierarchy.
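The top-down cascade can be pictured as a traversal of a plan hierarchy. The sketch below is only an analogy: the `DECOMPOSITION` table and its action names are invented for illustration, and a breadth-first walk stands in for C6 cells moving lower-level cells to “selected” status. It assumes the decomposition has no cycles and does not model the wait for matching Feed-Forward evidence.

```python
from collections import deque

# Hypothetical decomposition of a high-level plan into lower-level parts.
DECOMPOSITION = {
    "buy_and_eat_ice_cream": ["walk_to_counter", "pay", "eat"],
    "walk_to_counter": ["step_left", "step_right"],
    "eat": ["raise_cone", "lick"],
}


def cascade(top_level_plan, decomposition):
    """Return plan elements in the order they become "selected", top-down."""
    selected = []
    queue = deque([top_level_plan])
    while queue:
        element = queue.popleft()
        selected.append(element)
        # Each selected element selects its lower-level parts in turn.
        queue.extend(decomposition.get(element, []))
    return selected


order = cascade("buy_and_eat_ice_cream", DECOMPOSITION)
print(order)
```

Elements with no entry in the table, such as `pay`, play the role of the lowest level of the hierarchy, where the plan becomes motor activity.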

Note that the plan is also flowing in the “forward” direction, as it incrementally becomes reality rather than expectation. As motor actions take place, they are sensed and signalled through the Feed-Forward pathways. When C5 cells become “selected”, this information becomes available to higher columns in the hierarchy, if not filtered. This also helps the Feed-Forward Indirect pathway and C6 cells to keep track of activity and execute the plan in a coordinated manner.

At the lowest levels of the hierarchy, the plan becomes a sequence of motor activity, which is activated by C5 cells directly, and also by other brain components that are not covered by our general intelligence model.

A few moments later, the ice-cream is enjoyed, triggering a release of Dopamine into the Striatum and reinforcing the rewards associated with recently active Cortical input. Delicious!
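One simple way to picture this reinforcement step is a reward-scaled weight update. The rule below is an assumption chosen for illustration, not a claim about how the Striatum actually learns: the `reinforce` function, the `learning_rate` parameter and the input names are all hypothetical.

```python
def reinforce(weights, recent_activity, reward, learning_rate=0.1):
    """Return a copy of weights, strengthened for recently active inputs.

    Each recently active input is nudged in proportion to the reward and
    to how active it was; inactive inputs are left unchanged.
    """
    updated = dict(weights)
    for name, activity in recent_activity.items():
        updated[name] = updated.get(name, 0.0) + learning_rate * reward * activity
    return updated


weights = {"ice_cream_seen": 0.5, "rain_seen": 0.2}
recent_activity = {"ice_cream_seen": 1.0}  # Cortical input active before the reward

new_weights = reinforce(weights, recent_activity, reward=1.0)
print(new_weights)
```

Only the weight for the input that was active shortly before the reward changes, so the sight of ice-cream is weighted more strongly the next time it reaches the Striatum.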

Summary

In the previous articles we explored the characteristics of a general intelligence and looked at some of the features we expected it to have. In part 2 and part 3 we reviewed some relevant computational neuroscience research. In this article we’ve described our interpretation of this background material.

We presented a model of general intelligence built from 3 interacting systems - Objective, Subjective and Executive. We described how these systems could learn and bootstrap via interaction with the world, and how they could be implemented by the anatomy of the brain. As an example, we traced an experience from sensation, through planning and to execution.

Let’s assume that our understanding of biology is approximately correct. We can use this as inspiration to build an artificial general intelligence with a similar architecture and test whether the systems behave as described in these articles.

The next article in this series will look specifically at how these concepts could be implemented in software, resulting in a system that behaves much like the one described here.